As the tech community awaits NVIDIA’s next generation of graphics cards, rumors about the GeForce RTX 5090 and a card dubbed the TITAN AI have started to surface. Enthusiasts and professional users alike are following claims of significant performance gains over the current flagship, the RTX 4090. This article examines the rumored advances and what they might mean for the future of graphics processing and artificial intelligence (AI) workloads.

The Anticipated Leap: GeForce RTX 5090

According to unconfirmed reports, the NVIDIA GeForce RTX 5090 could be about 48% faster than the current top-tier RTX 4090. A jump of that size would set a new standard for gaming and professional graphics rendering, making the RTX 5090 a highly coveted upgrade.

Rumored specifications hint at improvements in several critical areas:

- CUDA Cores: Increased count, enhancing parallel processing capabilities.

- Clock Speeds: Higher base and boost clock speeds, translating to faster computations.

- Memory: Adoption of faster and more efficient GDDR7 memory, providing higher memory bandwidth.

Together, these enhancements could significantly improve real-time ray tracing, texture rendering, and the overall gaming experience, pushing the boundaries of what’s visually possible.

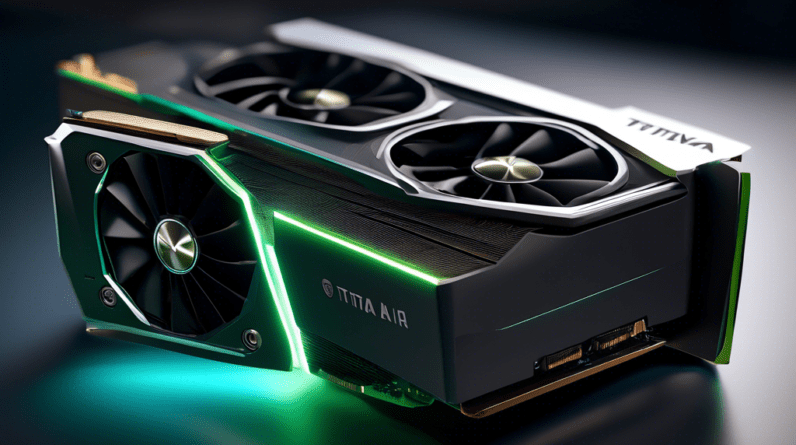

The TITAN AI: A New Benchmark

Equally exciting, if not more so, are the rumors surrounding the TITAN AI, a card designed with machine learning and AI applications at its core. Reports suggest it could outperform the RTX 4090 by 63%, which would make it a game-changer for researchers, data scientists, and developers.
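Because both rumored figures are quoted against the same RTX 4090 baseline, they can also be compared with each other. The short sketch below takes the reported 48% and 63% at face value and assumes both were measured on the same workloads, which the reports do not specify:

```python
# Rumored speedups over the RTX 4090, taken at face value (unconfirmed).
# Assumption: both percentages come from comparable workloads.
RTX_4090 = 1.00
RTX_5090 = RTX_4090 * 1.48  # rumored +48%
TITAN_AI = RTX_4090 * 1.63  # rumored +63%


def uplift(new: float, old: float) -> float:
    """Return the percentage by which `new` is faster than `old`."""
    return (new / old - 1) * 100


print(f"TITAN AI vs RTX 5090: {uplift(TITAN_AI, RTX_5090):.1f}%")
```

Note that the gaps do not simply subtract: 63% minus 48% is 15 percentage points, but relative to the RTX 5090 the TITAN AI would be only about 10.1% faster, because the 5090 already starts from a higher baseline.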

Key innovations speculated for the TITAN AI include:

- Tensor Cores: A more advanced Tensor Core architecture, optimized for faster AI model training and inference.

- Memory Configuration: Massive VRAM, potentially exceeding 48GB, to handle extensive datasets and complex simulations.

- Power Efficiency: Advanced power management that delivers more performance per watt.

The impact of these enhancements would extend well beyond gaming, providing unprecedented computational power for AI research, deep learning, and neural network development.

Real-World Implications

These rumors remain unconfirmed, but if they prove accurate, the advances would have significant real-world implications. For gamers, the GeForce RTX 5090 could provide smoother, higher-resolution experiences with minimal latency. For professionals in graphic design, video editing, and 3D modeling, it could mean faster rendering times and greater creative freedom.

The TITAN AI’s performance improvements could lead to breakthroughs in AI and machine learning, offering researchers the tools they need to push the envelope in autonomous driving, natural language processing, and advanced robotics.

Conclusion

The speculation surrounding NVIDIA’s GeForce RTX 5090 and TITAN AI paints a promising picture of the future of computing performance. With significant improvements over the existing RTX 4090, these next-generation cards could reach new heights in both gaming and professional applications. As always, it will be interesting to see which of these rumors turn into concrete announcements and products, setting the stage for the next era of graphics and AI innovation.